“Prosthetic limbs have changed very little in the past hundred years,” observes Dr. Kianoush Nazarpour of Newcastle University.

Some advances have made prosthetic limbs more comfortable for amputees. But the real challenge is building prostheses that truly mimic natural limbs.

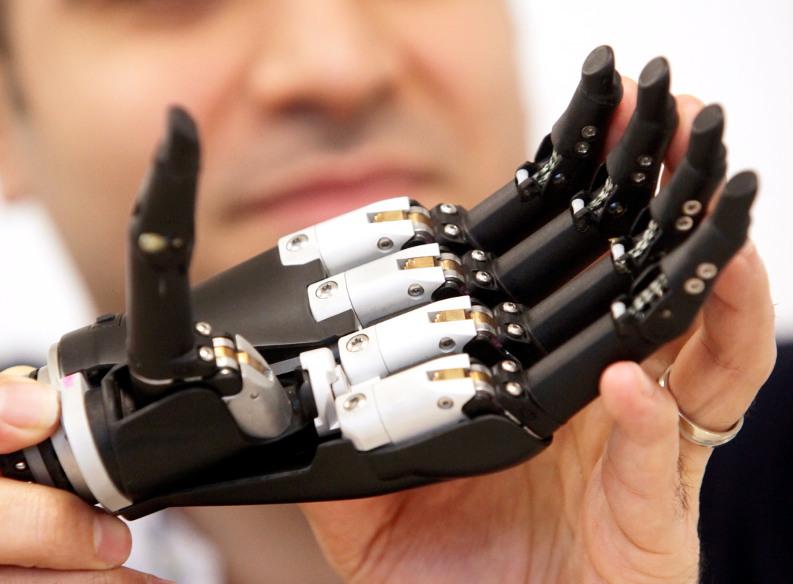

That’s why Nazarpour’s new bionic hand may be a game-changer for the field. His invention can effectively see and react to its surroundings, hence its description as an “intuitive” hand.

The Limits of Traditional Prosthetic Limbs

Prosthetic limbs were once cold, lifeless things. A lost leg became a strap-on stump or a wooden peg. A severed hand became a chilly brass replica. These prostheses could hardly replace warm, flesh-and-blood limbs.

Even in recent years, prostheses have made only limited progress. The wooden peg leg has become a metal extension, and advances in nerve connectivity now allow some amputees to move prosthetic hands. But even these devices remain clunky in practice, Nazarpour explains, and their response time can be painfully slow.

To close that gap, Dr. Nazarpour has unveiled a new “intuitive” hand. This camera-equipped bionic limb marks a major leap forward in the development of prosthetics. As Nazarpour explains:

“Responsiveness has been one of the main barriers to artificial limbs. For many amputees, the reference point is their healthy arm or leg, so prosthetics seem slow and cumbersome in comparison. Now, for the first time in a century, we have developed an ‘intuitive’ hand that can react without thinking.”

Kianoush Nazarpour Unveils “Intuitive” Prosthetic Hand

Nazarpour, who grew up in Iran, wanted to mimic the automatic responsiveness of a real limb. What if a bionic hand could actually see what it was trying to pick up? A natural hand, after all, is guided by its owner’s sight and reaches out to grasp an object almost without thought.

Nazarpour realized that the same principle could apply to prosthetics. His technology, recently featured in the Journal of Neural Engineering, combines a camera, computer vision, and artificial intelligence so that the bionic hand can react within milliseconds and perform four different grasping motions.

An amputee using Nazarpour’s hand can pick up a coffee mug, operate a TV remote, and even pinch-hold smaller objects like a slip of paper or a shoelace, all in one smooth, uninterrupted motion.

“The user can reach out and pick up a cup or a biscuit with nothing more than a quick glance in the right direction,” Nazarpour says.

Kianoush Nazarpour’s Prosthetic Hand Can Grasp and Feel

Perhaps the most notable thing about the new technology is what it does not do. Specifically, the hand does not rely on a stored, object-specific response for every item it comes across.

The hand does not have to recognize objects as a “cup” or a “spoon” or a “TV remote,” each with its own programmed response. Instead, it assesses the object’s shape on the fly and reacts accordingly.

“The computer isn’t just matching an image, it’s learning to recognize objects and group them according to the grasp type the hand has to perform to successfully pick it up,” explains Ghazal Ghazaei, a PhD candidate at Newcastle who helped develop the hand’s artificial intelligence. “It is this which enables the hand to accurately assess and pick up an object which it has never seen before – a huge step forward in the development of bionic limbs.”

Nazarpour’s team plans to develop the hand further by integrating it directly with the user’s nervous system, attaching sensors to nerve endings in the residual limb. With this system, users will be able to feel what they are grasping: its temperature, the pressure of the grip, and even its texture. The ultimate goal is for the brain to process these signals just as it would sensations from a natural limb.

That may sound like something out of a sci-fi movie, but it builds on years of work that Nazarpour and his team have invested in bringing this project to life. Or, as Nazarpour modestly puts it, “It’s a stepping stone.”